Running 50 on one machine, four on my fileserver, and another on a hacked-up HP EliteOne (no screen) that runs my 3D printer. I believe my Immich container is an nspawn container under NixOS too.

Some are a WIP, but the majority are in use. They're mostly internal services, with a couple that are internet-facing. I've got a good backlog of work to do on some of them, plus refactoring my NixOS configs for many 😅.

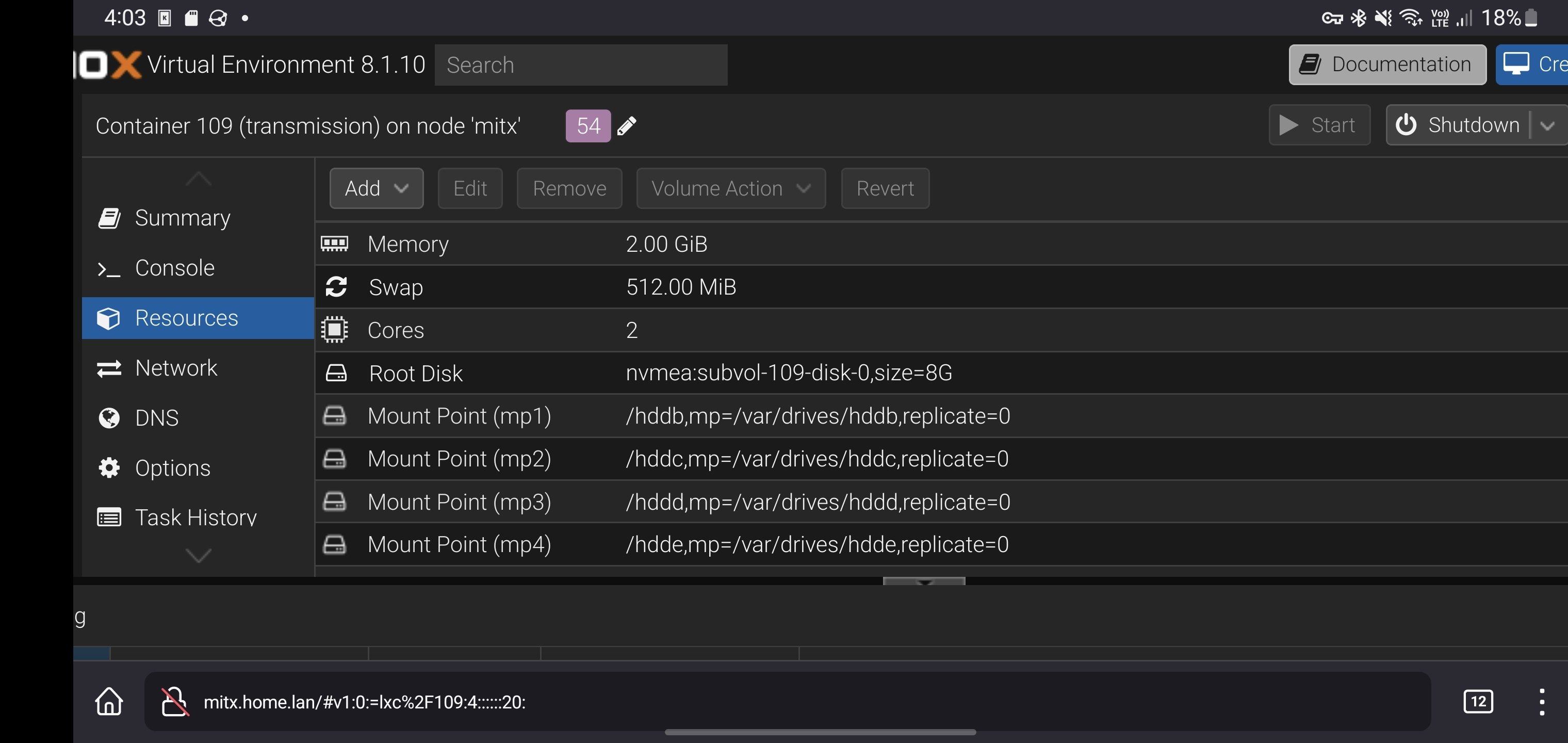

From my Erying ES system:

My services are quite small (a static website, Forgejo and a couple more), but I see no performance issues.